In today's world, trucks play a key role in transporting freight and supplying various sectors of the economy with essential goods and services. An important aspect of their operation is…

Грузовичок

The modern world faces serious challenges from climate change, which brings unstable weather, more frequent extreme events, and shifts in ecosystems. One of the industries…

Trucks play an important role in international and local logistics, carrying freight around the world. However, like any other vehicle, trucks are subject to…

In the haulage business, where long trips and long hours behind the wheel are routine, comfort and safety in the truck cab play an important role. A comfortable and functional…

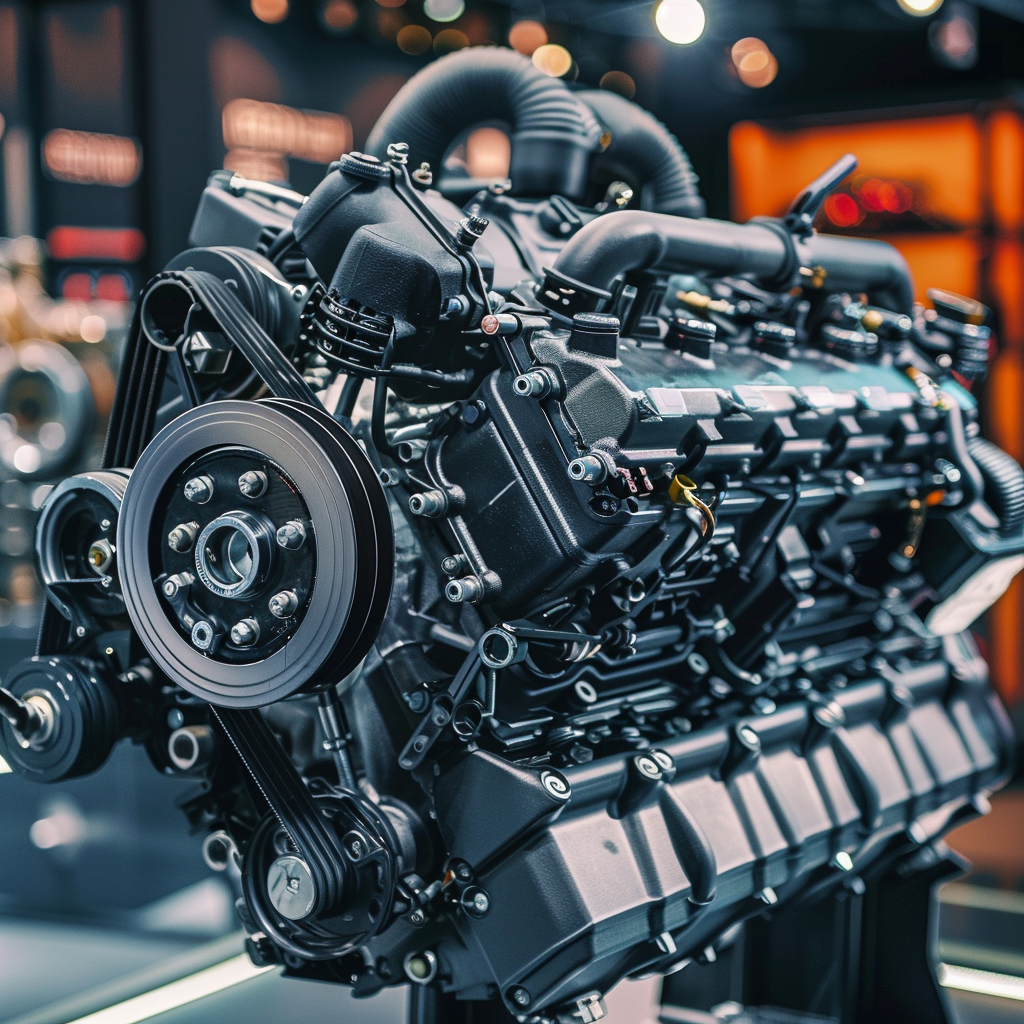

In the automotive industry, the choice between diesel and petrol engines is one of the key questions. Each of these engine types has its own characteristics, advantages and…

Oil prices play a key role in the global economy, affecting various industries, including the freight transport sector. Oil is the main source of energy for most modes of transport,…